December 28, 2019

Thoughts about Computer Benchmark Testing

Unlike vehicles whose specifications include a horsepower rating, computers don’t have a single number that indicates how powerful they are. True, horsepower (what an antiquated term that is) isn’t really all that relevant, because that number alone doesn’t tell the whole story. The same engine that makes a light vehicle a rocket might struggle in a larger, heavier vehicle. And even if the vehicles weighed the same, performance might be different because of a number of other factors, such as transmission, aerodynamics, tires, and so on. That’s why car magazines measure and report on various aspects and capabilities of a vehicle: acceleration, braking, roadholding, as well as subjective impressions such as how effortlessly and pleasantly a vehicle drives and handles.

So how is performance measured in computers, and why is “performance” relevant in the first place? To answer that, we first need to look at why performance is needed and what it does in both vehicles and computers. In vehicles, performance clearly means how effortlessly the engine moves the car or truck. Step on the gas, and the vehicle instantly responds. And it has enough performance to handle every situation. That said, absolute peak performance may or may not matter. On the racetrack it does matter, but what constitutes enough performance for tooling through town and everyday driving is a different matter altogether. And that’s pretty much the same for computers.

The kind of performance that matters

The kind of performance that matters most in computers is that which enables the system to respond quickly and effortlessly to the user’s commands. And just like in vehicles, very high peak performance may or may not matter.

If a system is used for clearly defined tasks, all that is needed is enough performance to handle those, and everything above that is wasted. If a system may be used for a variety of tasks, there must be enough performance to reasonably handle everything it may encounter. And if a system must be able to handle very complex tasks and very heavy loads, it must have enough peak performance to do that as well as possible.

What affects performance?

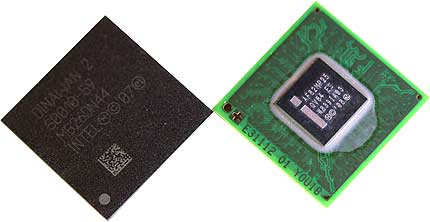

So how do we know if a system can handle a certain load? In combustion engines, the number of cylinders matters, even though thanks to turbocharging and computerized engine control that is no longer as relevant as it once was. Still, you see no vehicles with just one or two cylinders except perhaps motorcycles. Four was/is the norm for average vehicles, six is better, and eight or even twelve means power and high performance. And there, it’s much the same in computers: the number of computing cores, the cylinders of a CPU, often suggests its power. Quad-core is better than dual-core, octa-core is a frequently used suggestion of high performance, and very high performance systems may have even more.

But the number of cores is not all that matters. After all, what counts in computing is how many instructions can be processed in a given time. And that’s where clock speed comes in. That’s measured in megahertz or gigahertz, millions or billions of cycles per second. More is better, but the number of cycles doesn’t tell the whole story. That’s because not all instructions are the same. In the world of computers, there are simple instructions that perform just basic tasks, and there are complex instructions that each accomplish much more. Which is better? The automotive equivalent may be not the number of cylinders, but how big each cylinder is and how much punch it generates with each stroke. For many years Americans valued big 8-cylinder motors, whereas European and Japanese vehicle manufacturers favored small, efficient 4-cylinder designs.

RISC vs CISC

In computers, the term RISC means reduced instruction set computer and CISC complex instruction set computer. The battle between the two philosophies began decades ago, and it carries on today. Intel makes CISC-based computer chips that drive most PCs in the world. On the other side is ARM (Advanced RISC Machine) that is used in virtually all smartphones and small tablets.

What that means is that computing performance depends on the number of computing cores, the type and complexity of the cores, the number of instructions that can be completed in a second, and the type and complexity of those instructions.
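As a rough, back-of-the-envelope illustration of how those factors multiply, here is a minimal Python sketch. The function name and both sets of chip numbers are made up for the example, and real processors are far messier than a single multiplication suggests.

```python
def peak_instructions_per_second(cores: int, clock_hz: float,
                                 instructions_per_cycle: float) -> float:
    """Idealized upper bound: every core retires the same number of
    instructions on every clock cycle (an assumption, not reality)."""
    return cores * clock_hz * instructions_per_cycle

# Hypothetical chips, not measurements of any real product.
quad_core = peak_instructions_per_second(cores=4, clock_hz=3.0e9,
                                         instructions_per_cycle=2.0)
octa_core = peak_instructions_per_second(cores=8, clock_hz=2.0e9,
                                         instructions_per_cycle=1.0)

print(f"quad-core: {quad_core:.2e} instructions/s")
print(f"octa-core: {octa_core:.2e} instructions/s")
```

With these made-up numbers the quad-core chip comes out ahead of the octa-core one, which is the point: core count alone says little, and even this estimate ignores how much work each instruction actually does.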

But that is far from all. It also depends on numerous other variables. It depends on the operating system that uses the result of performed instructions and converts it to something that is valuable for the user. That can be as simple as making characters appear on the display when the user types on the keyboard, or as complex as computing advanced 3D operations or shading.

Performance depends not just on the processor, but also on the various supporting systems that the processor needs to do its work. Data must be stored and retrieved to and from memory and/or mass storage. How, and how well, that is done has a big impact on performance. The overall “architecture” of a system greatly matters. How efficient is it? Are there bottlenecks that slow things down?

The costs of performance

And then there is the amount of energy the computer consumes to get its work done. That’s the equivalent of how much gas a combustion engine burns to do its job. In vehicles, more performance generally means more gas. There are tricks to get the most peak power out of each gallon or to stretch each gallon as much as possible.

In computers it’s electricity. By and large, the more electricity, the more performance. And just like in vehicles and their combustion engines, efficiency matters. Technology determines how much useful work we can squeeze out of each gallon of gas and out of each kilowatt-hour of electricity. Heat is a byproduct of converting both gasoline and electricity into useful (for us) performance. Minimizing and managing that waste heat is key to both maximum power generation and the efficiency of the process.

So how does all of that relate to “benchmarking”?

Benchmarking represents an effort to provide an idea of how well a computer performs compared to other computers. But how does one do that? With vehicles, it’s relatively simple. There are established and generally agreed on measures of performance. While “horsepower” itself, or the number of cylinders, means relatively little, there is the time a vehicle needs to accelerate from 0 to 60 miles per hour, or reach 1/4 mile from a standing start. For efficiency, the number of miles one can drive per gallon matters, and that is measured for various use scenarios.

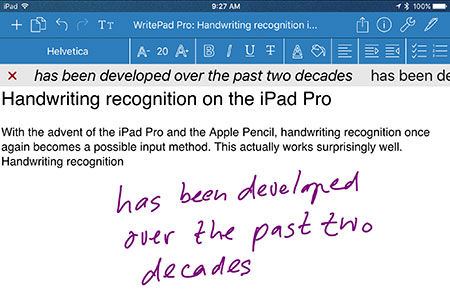

No such simple and well-defined measurement standards exist for computers. When, together with a partner, I started Pen Computing Magazine over a quarter of a century ago, we created our own “benchmark” test when the first little Windows CE-based clamshell computers came along. Our benchmark consisted of a number of things a user of such a device might possibly do in a given workday. The less overall time a device needed to perform all of those tasks, the more powerful it was, and the easier and more pleasant it was to use.
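The idea behind such a task-based benchmark can be sketched in a few lines of Python. This is not the original Pen Computing test; the three workloads below are hypothetical stand-ins, and a real test would time the things a user actually does on the device.

```python
import time

def run_benchmark(tasks):
    """Run each task once; return per-task seconds and the overall total."""
    timings = {}
    for name, task in tasks.items():
        start = time.perf_counter()
        task()
        timings[name] = time.perf_counter() - start
    return timings, sum(timings.values())

# Hypothetical stand-in workloads, chosen only so the sketch runs anywhere.
tasks = {
    "sort_records": lambda: sorted(range(200_000, 0, -1)),
    "build_text": lambda: "".join(str(i) for i in range(50_000)),
    "sum_squares": lambda: sum(i * i for i in range(200_000)),
}

timings, total = run_benchmark(tasks)
for name, seconds in timings.items():
    print(f"{name}: {seconds:.4f} s")
print(f"total: {total:.4f} s (lower is better)")
```

Running the same task list on two machines and comparing the totals gives the kind of "less overall time is better" figure described above.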

And that is the purpose of a benchmark, to see how long it takes to complete tasks for which we use a computer. But, lacking such generally accepted concepts as horsepower, 0-60 and 1/4 mile acceleration and gas mileage, what should a benchmark measure?

What should a benchmark measure?

The answer is, as so often, “it depends.” Should the benchmark be a measure of overall, big picture performance? Or should it measure performance in particular areas or with a particular type of work or a particular piece of software?

Once that has been decided, what else matters? We’d rate being able to compare results with as many other tested systems as possible as very important, because no performance benchmark result is an island. It only means something in comparison with other results.

And that’s where one often runs into problems. That’s because benchmark software evolves along with the hardware it is designed to test. That’s a good thing, because older benchmark software may not test, or know how to test, new features and technologies. That can be a problem because benchmark results conducted with different versions of a particular type of benchmark may no longer be comparable.

But version differences are not the only pitfall. Weighting is as well. Most performance benchmarks test various subsystems and then assign an importance, a “weight,” to each when calculating the overall performance value.

Here, again, weighting may change over time. One glaring example is the change in weighting when mass storage went from rotating disks to solid state disk and then to much faster solid-state disk (PCIe NVMe). Storage benchmark results increased so dramatically that overall benchmark results would be distorted unless weighting of the disk subsystem was re-evaluated and changed.
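To make the weighting problem concrete, here is a small Python sketch of a weighted overall score. The subsystem names, weights, and scores are invented for illustration, and actual benchmark suites may combine their results quite differently.

```python
def overall_score(scores, weights):
    """Weighted average of subsystem scores."""
    total_weight = sum(weights.values())
    return sum(scores[name] * weights[name] for name in weights) / total_weight

# Hypothetical weights and scores; only the disk result differs.
old_weights = {"cpu": 4, "graphics": 2, "memory": 2, "disk": 2}
new_weights = {"cpu": 5, "graphics": 2, "memory": 2, "disk": 1}

rotating_disk = {"cpu": 1000, "graphics": 1000, "memory": 1000, "disk": 1000}
nvme_disk = {"cpu": 1000, "graphics": 1000, "memory": 1000, "disk": 20000}

print(overall_score(rotating_disk, old_weights))  # baseline system
print(overall_score(nvme_disk, old_weights))      # same system, faster storage
print(overall_score(nvme_disk, new_weights))      # after reducing the disk weight
```

In this made-up case the overall score nearly quintuples under the old weights even though only storage changed. Reducing the disk weight tempers that, but scores computed with different weights are then no longer directly comparable.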

Overall system performance

The big question then becomes what kind of benchmark would present the most reliable indicator of overall system performance. That would be one that not only shows how a system scores against current-era competition, but also against older systems with older technologies. One that tests thoroughly enough to truly present a good measure of overall performance. One that makes it easy to spot weaknesses or strengths in the various components of a system.

But what if one size doesn’t fit all? If one wants to know how well a system performs in a particular area like, for example, advanced graphics? And within that sub-section, how well the design performs with particular graphics standards, and then even how it works with different revs of such standards? That’s where it can quickly get complex and very involved.

Consider that raw performance isn’t everything. A motor with so and so much horsepower may run 0-60 and the 1/4-mile in so and so much time. But put that same motor in a much heavier car, and the vehicle would run 0-60 and the 1/4-mile much slower. Computers aren’t weighed down by weight, but by the operating system overhead. Recall that the earliest PCs often felt very quick and responsive whereas today’s systems with technology that’s hundreds or thousands of times as powerful can be sluggish. Which means that OS software matters, too, and its impact is rarely measured in benchmark results.

Finally, in the best of all worlds, there’d be benchmarks that could measure performance across operating systems (like Windows and Android) and processor technology (like Intel x86 and ARM). That does not truly and reliably exist.

How we measure performance

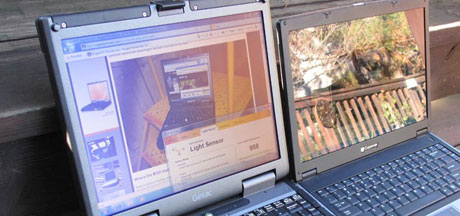

Which brings me to the way we benchmark at our operation, RuggedPCReview.com. As the name indicates, we examine, analyze, test and report on rugged computers. Reliability, durability and being able to hold up under extreme conditions matter most in this field. Performance matters also, but not quite as much as concept, design, materials, and build. Rugged computers have a much longer life cycle than consumer electronics, and it is often power consumption, heat generation, long term availability, and special “embedded” status that rate highest when evaluating such products. But it is, of course, still good to know how well a product performs.

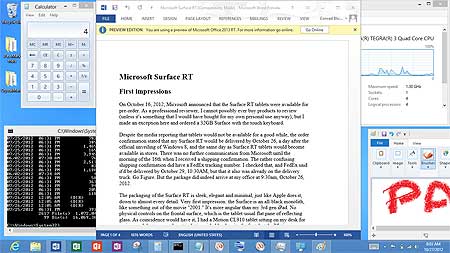

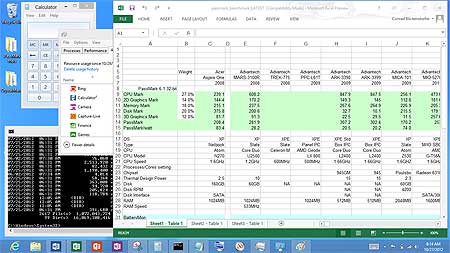

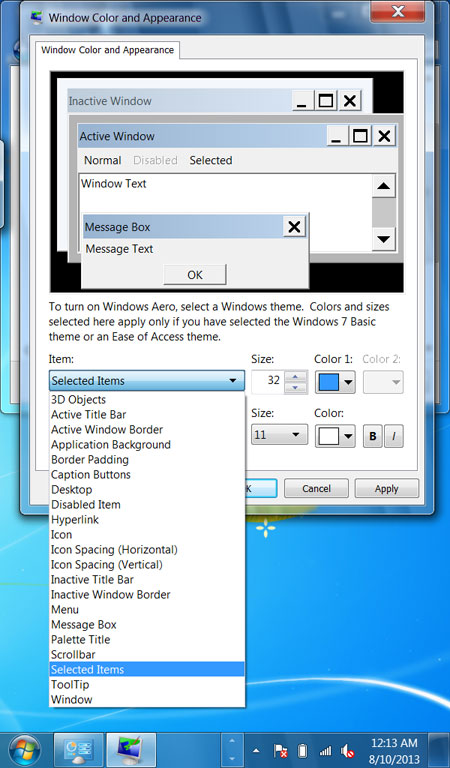

So we chose two complete-system benchmarks that each give all parts of a computer a good workout. We decided to standardize on two benchmarks that would not become obsolete quickly. And this approach has served us well for nearly a decade and a half. As a result, we have benchmarks of hundreds of systems that are all still directly comparable.

A few years ago, we did add a third, a newer version of PassMark, mostly because our initial standard version no longer reliably ran on some late model products.

Do keep all of that in mind when you peruse benchmarks. Concentrate on what matters to you and your operation. If possible, use the same benchmark for all of your testing or evaluation. It makes actual, true comparison so much easier.

Posted by conradb212 at 7:50 PM

October 1, 2019

How the rugged PC "drop spec" just became different

The Department of Defense significantly expands drop test definitions and procedures in the new MIL-STD-810H Test Method Standard

You wouldn't know it from looking at almost all of the published ruggedness testing results, but the good old DOD MIL-STD-810G was replaced by the MIL-STD-810H in January 2019. And if you thought the old MIL-STD-810G was massive in size, the new one is bigger yet. While the old MIL-STD-810G document was 804 pages, the new one has 1,089. That's 285 extra pages of testing procedures.

The new standard brings quite a few changes in ruggedness testing. In this article I'll take a first look at one of the marquee tests as far as rugged mobile computing equipment goes, the transit drop test. It was described in Method 516.6 Procedure IV in the old MIL-STD-810G, and now it is under Method 516.8 Procedure IV in the new MIL-STD-810H. The drop section has grown from two to six pages, and there are some interesting changes.

Drop surface: Steel over concrete

The basic approach to drop testing remains the same. Items must still be tested in the same configuration that is actually "used in transportation, handling, or a combat situation." What has changed is how testing is done. Procedures are described in more detail.

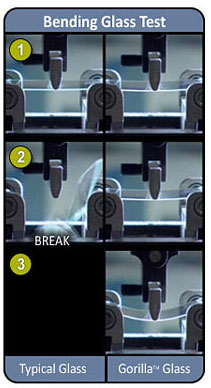

Under the old MIL-STD-810G, lightweight items such as mobile computing equipment had to be dropped onto two-inch plywood backed by concrete, and only really heavy gear weighing over 1,000 pounds directly onto concrete. That has changed. The DOD was apparently concerned about repeatability of results, and surface configuration can have a substantial impact on that. The DOD wanted to test the most severe damage potential, that of "impact with a non-yielding mass that absorbs minimal energy."

Since plywood hardness can change and affect results, under MIL-STD-810H the default impact surface is now steel plate over concrete. For mobile computing gear purposes, the steel plate must be at least an inch thick, have a Brinell hardness of 200 or more, the concrete underneath must be reinforced, have a minimum compressive strength of 2500 psi, and the steel must be bonded or bolted onto the concrete to form a uniform, rigid structure.

This seems drastic. Clearly, what the DOD is concerned about here is that it is the dropped item that must absorb the impact and not the surface that something is dropped on, hence the steel instead of plywood. Fortunately, the DOD realizes that there is a big difference between dropping light and very heavy gear, and also what something might fall on. On an aircraft carrier, for example, it would almost always be steel. Out there in the field, it's more likely asphalt or dirt.

So the supporting notes allow that "concrete or 2-inch plywood backed by concrete may be selected if (a) a concrete or wood surface is representative of the most severe conditions or (b) it can be shown that the compressive strength of the impact surface is greater than that of the test item impact points." Whew. But steel is actually a very good idea.

Different drop scenarios

The MIL-STD-810H recognizes that not all drops are equal. The "transit drop" in the old MIL-STD-810G seemed to primarily assume that something falls off a truck when loading and unloading equipment. This really didn't have much relevance to the most likely drop scenario in mobile computing -- dropping a tablet or laptop while using it in the field. So whereas the old standard only had one drop scenario -- transit drop -- the new MIL-STD-810H standard has three.

There is the "logistic transit drop test" that states drop conditions for "non-tactical logistical transport," i.e. dropping things off the proverbial truck. The "tactical transport drop test" includes scenarios associated with "tactical transport beyond the theatre storage area." And, finally, there's a "severe tactical transport drop test" where items pass as long as they do "not explode, burn, spread propellant or explosive material as a result of dropping, dragging or removal of the item for disposal."

Now what does all that mean?

The "logistic transit drop" test is, in essence, the same as the old MIL-STD-810G transit drop test. Items where the largest dimension is no more than 36 inches (i.e. all mobile computing gear) must pass 48-inch drops on "each face, edge, and corner; total of 26 drops." These 26 drops may be among no more than five test items.
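The figure of 26 follows from the geometry of a box-shaped item: 6 faces, 12 edges, and 8 corners. The short Python sketch below enumerates those impact orientations; the function name and the direction encoding are my own illustration and not anything taken from the standard.

```python
from itertools import product

def drop_orientations():
    """Enumerate impact orientations of a box-shaped test item.

    Each orientation is a direction (x, y, z) with components in
    {-1, 0, 1}, excluding (0, 0, 0). One nonzero component points at
    a face, two at an edge, and three at a corner.
    """
    kinds = {1: "face", 2: "edge", 3: "corner"}
    orientations = []
    for direction in product((-1, 0, 1), repeat=3):
        nonzero = sum(1 for component in direction if component != 0)
        if nonzero:
            orientations.append((kinds[nonzero], direction))
    return orientations

orientations = drop_orientations()
counts = {}
for kind, _ in orientations:
    counts[kind] = counts.get(kind, 0) + 1

print(counts, "total:", len(orientations))
```

A list like this could also serve as a simple checklist for documenting which face, edge, or corner each drop landed on.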

The "tactical transport drop" offers five scenarios (ship, unpackaged, packaged, helicopter, and parachute), where only one scenario applies to conventional ruggedness testing of mobile computing gear "unpackaged handling, infantry and man-carried equipment." There, the drop height is five feet. And instead of 26 drops, there are five standard drop orientations (drop to flat bottom, left end, right end, bottom right edge at 45 degrees, and top left end corner at 45 degrees), with each item exposed to no more than two drops.

The "severe tactical transport drop" test really only applies to items being dropped from significant heights, like helicopters, aircraft, cranes and such. There, the drop height ranges from 7 feet (falling out of a helicopter, unpackaged) to 82 feet (shipboard loading onto an aircraft carrier). This rarely applies for the purposes of rugged mobile computers, but for bragging rights, some makers of rugged computing gear will undoubtedly perform those tests (you know who you are!).

Guidance systems

If you've ever wondered how one can drop an item so that it lands exactly on a mandated edge or corner, the MIL-STD-810H offers help and a suggestion. "Guidance systems which do not reduce the impact velocity may be employed to ensure correct impact angle, however, guidance shall be eliminated at a sufficient height above the impact surface to allow unimpeded fall and rebound." We've never seen any such system, and it will be interesting to see whether someone comes up with such a drop test guidance mechanism.

Evaluating results

Now how does one perform, document and evaluate the drops? That has always been the weakest point in drop testing, and the new MIL-STD-810H brings no change.

It's simply recommended to "periodically" examine an item visually and operationally during the testing, to help in the follow-up evaluation. It's recommended to document the impact point and/or surface of each drop and any obvious damage. And that's it.

So although the "drop test" section in the new MIL-STD-810H has grown considerably in length, new categories have been added, and testing methods mandated in more detail, much remains quite vague. That's to be expected of a testing procedure that covers a vast variety of different items that may be packaged, unpackaged, and ranging in weight from very light to thousands of pounds. And so the section ends with "conduct an operational checkout in accordance with the approved test plan."

My recommendations

What do we learn from all this? In essence, that it all depends, even under the more detailed guidelines of the new MIL-STD-810H.

What's important with mobile computing gear is that it still works after you drop it. Whether it's operating during the test or not used to matter back when mobile computers had hard disks. With solid state storage it functionally really doesn't matter whether testing is done with the system on or not.

I suggest doing the tests with the unit operating. That way it's immediately obvious if a drop killed the unit or not. And leaving it on alleviates the need to start it up after every drop.

I would not split the mandated number and types of drops on five units. That makes no sense. Instead, I would do all mandated drops on the same unit, but do all those drops on five successive units. All five must pass, i.e. still work after all drops. That way, one can be reasonably sure that results were not a fluke.

As far as the surface goes, most units will fall on carpet or wood indoors, and natural surfaces all the way to concrete outdoors. Using the suggested steel over concrete shows you're taking the testing seriously. Using plywood is a bit of a cop-out because it's much softer and less likely to damage a unit. And the hardness of plywood varies.

Doing the 5-foot "tactical transport drop" test definitely gets extra credit. When using a handheld computer as a phone it'll fall from five or six feet. And even tablets can easily fall from more than four feet. Besides, the "tactical transport drop test" for "infantry and man-carried equipment" (presumably the DOD means women-carried as well) much better describes the real world drop scenarios of mobile computing gear.

Passing the "severe tactical transport drop" test that starts at seven foot drops gets extra credit.

So hit the books and study the pertaining sections of the new MIL-STD-810H. You don't want to be the last to switch. -- Conrad H. Blickenstorfer, September 2019

Posted by conradb212 at 6:06 PM

July 6, 2019

The uneven performance of cameras in rugged handhelds and tablets

Recently I ran the usual set of integrated camera test pictures for our RuggedPCReview.com product testing lab with four devices all at once. That meant taking two pictures each of the 20 or so test settings around our offices in East Tennessee. The settings represent some tasks that users of rugged handhelds and tablets might do on the job. Examples are meter reading, capturing information from accessible and not-so-accessible labels, markings, or instructions, and so on. For a splash of color and to test close-up performance we also include pictures of flowers and greenery.

The reason why we take two pictures of each subject is because, on the job, one doesn't always have time to carefully set up a subject and baby the shot. It's very much point-and-shoot. On the job one might take two shots of whatever information is to be captured, just to make sure. So we do that, too. That cuts the possibility of getting a lousy shot in half. And lousy shots are still possible, even with the latest cameras and imagers.

Back in the lab, we examine the 20 or so pairs of pictures and select the better of each pair. We then pick nine representative shots and arrange them in a 3 x 3 picture image compilation. We save that compilation full-size as well as down-sampled to fit into our web page templates, both at 72dpi and 144dpi. This way, viewers can click on the screen image to load the full-size compilation and, if so desired, download that onto their computer for closer examination.

Cameras integrated into mobile computers aren't new. They've been around for two decades or more. In fact, rugged handheld computers and tablets had cameras even before cameras became an integral (and many say the most important) part of every smartphone. That's because rugged mobile computers are, in essence, data collection devices, and image data is part of that. Unfortunately, early such integrated cameras were often quite useless. Many were terrible and in no way up to the jobs they were supposed to do.

We've pointed this out again and again over the years — first in Pen Computing Magazine and now in RuggedPCReview.com, and heard all the reasons and excuses why integrated cameras weren’t any better. It took the iPhone and then the global smartphone revolution to demonstrate that tiny cameras in very small devices could be not only acceptable, but very good. So good, in fact, that smartphone cameras have replaced the dedicated point & shoot camera market. And so good that here at RuggedPCReview.com even for product photography we've been using smartphones and no longer dedicated cameras for the last three years.

Unfortunately, while smartphone cameras went from very good to excellent to downright stunning, such progress was slow in translating into similar improvements in integrated cameras. Cameras built into rugged mobile devices have become much better thanks to the global proliferation of smartphones and tablet technology. Better imagers are available at lower costs, and that means that we're now seeing SOME rugged devices with very decent cameras. Decent, but, with some notable exceptions, certainly not stunning like in the better smartphones.

Why is that? And why does this problem persist? We don't know. The primary excuses for mediocre integrated camera performance used to be size, cost, and lack of OS support. That doesn't wash anymore. If sliver-thin smartphones can take terrific pictures, it can't be size. Imager cost, likewise, has come way down. But how about operating system support? That may be the most likely reason.

It has long baffled us why cameras integrated into Windows tablets don't perform nearly as well as imager specifications suggest. By far the most likely culprit there is the truly awful generic Windows Camera app. Yes, there's the ever-present driver support issue, and supporting all sorts of different imagers in all sorts of different computer hardware isn't easy. But even that cannot justify the awfulness of the Windows Camera app that usually lacks, well, just about everything. The feeling we often get when testing integrated cameras is that the imager could do much better, but not when it has to deal with the stark, ultra-basic Windows Camera app that supports almost nothing you'd expect from a camera.

Compare the imaging wizardry that can be done with today's smartphones with the sad nothingness of the Windows Camera app. The gulf couldn't be larger. Things are considerably better on the Android device side, because Android was designed from the start as a smartphone OS. And that included decent camera software. Android, of course, also has to deal with all sorts of different imagers and hardware, but there's the economy of scale. Android is absolutely dominant in smartphones and that means literally billions of devices, and thus massive software developer support. As a result, rugged Android devices almost always outperform rugged Windows-based devices in imaging operation and quality. By a considerable margin.

What's the solution? We don't know. Maybe developing a decent camera app simply isn't economically feasible for the relatively low production runs even of popular rugged devices. Maybe the assumption is that almost every user will have a smartphone in their pocket anyway, and use that for serious photography. But then why even bother with putting integrated cameras into devices? Are they just a checkbox item for government requests for proposals?

But how did we make out with testing those four devices? For the most part, the resulting pictures were much better than what was possible in the past. Some of them were even excellent. And each device was capable of capturing images and video good enough for the job of documenting project-related information.

That said, the one-size-fits-all nature of the generic Windows and Android camera apps can be very frustrating when it's so obvious that the software is holding back the hardware. If the software cannot take full advantage of imager specs, if the lack of settings and functionality results in blurry pictures because the software can't properly focus or adjust for lighting, movement, contrast or type of light, then it hardly makes any sense to use the camera at all.

It pains me to be so negative about this, especially since some progress has been made. But it just is not enough. At a time when smartphones offer 4K video at 60 frames per second, 1080p/30fps simply isn't enough. Sluggish, lagging operation isn’t acceptable. And it makes no sense for camera software to not even support the full resolution of the hardware, or only in certain aspect ratios. It was terrible software that killed off the dedicated point & shoot camera market when smartphones came along, and it is terrible software that continues to make so many integrated cameras essentially useless.

This really needs to be addressed. As is, Android is way ahead of Windows in making good use of imaging hardware built into rugged mobile computing gear. Bill Gates recently mentioned that one of his greatest regrets is not to have taken the mobile space seriously and let Android become the (non-Apple) mobile systems OS winner. It seems Microsoft still isn’t taking the mobile space seriously. — C. Blickenstorfer, July 2019

Posted by conradb212 at 3:22 PM

May 17, 2019

Android contemplations 2019

In this article I’ll present some of my thoughts on Android, where it’s been, and where it’s going. I’ll discuss both my personal experiences with Android as well as my observations as Editor-in-Chief of RuggedPCReview.com.

Although my primary phone has been an iPhone ever since Steve Jobs introduced the first one, and although my primary tablet is an iPad, and also has been ever since the iPad was introduced to a snickering media that mocked the tablet as just an overgrown phone without much purpose, I am inherently operating platform agnostic.

I do almost all of my production work on an iMac27 because, in my opinion, there's nothing like a Mac for stress-free work, backup, and migration. But I also use Windows desktops because Windows continues to be, by far, the dominant desktop and laptop OS. And because all laptops and many tablets in the rugged mobile computing industry that I cover use Windows. I have a Linux box because I want to stay more or less up-to-date on an OS that so much is built on, even if few know what all is using Linux in the background. I use Android because Android is, by far, the dominant OS on smartphones, and it's a major factor in tablets (and Android is built on Linux).

Over the course of my career I've seen lots of operating systems come and go. The Palm OS once ruled the mobile space. Microsoft had Windows CE in its various incarnations. There was EPOC that later became Symbian. Android actually predated the iOS, though the first commercial version didn't appear until 2008. In 2009, Motorola's Droid commercials alerted the public to Android, but few would have guessed its impending dominance. By 2010, 100,000 Android phones were activated every day, but in the rugged space Android remained largely unknown. I did a presentation on trends and concepts in mobile computing at a conference in Sweden where I alerted the audience to the presence of Android and its future potential in rugged mobile devices.

In August 2010, I bought an Augen GenTouch78 for US$149.95 at K-Mart to educate myself in more detail about the potential of Android in tablets and present my findings on RuggedPCReview.com (see here). The little 7-inch Augen tablet, one of the very few Android tablets available back then, ran Android 2.1 "Eclair" and showed promise, but it didn't exactly impress. So while Android had already gained a solid foothold behind iOS and Symbian in smartphones, its future in tablets still seemed uncertain.

Android's fortunes in rugged tablets appeared to change in 2011 when, to great fanfare, Panasonic introduced the Toughpad A1 at Dallas Cowboy Stadium in Arlington, Texas. Getac followed in 2012 with the Z710, and Xplore in 2013 with the RangerX. None of these lived up to sales expectations. In light of the massive success of Android in smartphones, the problems Android had in establishing itself in tablets seemed hard to understand. In hindsight, it may have been a combination of Google's almost 100% concentration on phones and Microsoft's rediscovery of touch and tablets with the introduction of Windows 8 and, from 2012 on, the Microsoft Surface tablets.

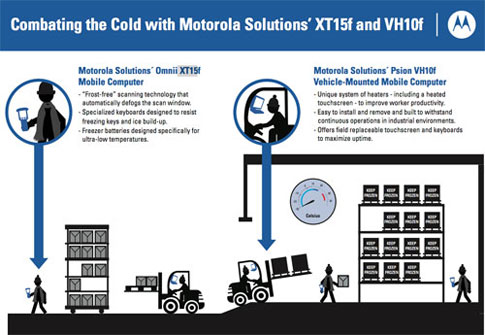

In the ruggedized handheld space, driven by the success of Android-based smartphones, the situation began to change. After having been beaten to the punch by smaller, nimbler companies such as the Handheld Group, Unitech and Winmate, both Motorola Solutions and Honeywell began placing emphasis on Android. Both announced their commitment to Android in late 2013, albeit initially by simply making available some of their devices either with Windows Mobile or with Android. For the next several years, offering both Windows Embedded Handheld (the renamed Windows Mobile) and Android versions of handhelds became the norm. It was a (not very cost-effective) case of hedging bets and offending no one.

By 2016 it became obvious that Microsoft had either lost the mobile war or had simply lost interest in it. The situation had been quite untenable for several years where Microsoft had essentially abandoned Windows Embedded Handheld and concentrated on Windows Phone instead. Windows Phone, later strangely renamed to Windows Mobile, didn't go anywhere either, and so Microsoft called it quits.

It was time for me to get another personal Android device to see how the platform had developed on the tablet side. My choice was the Dell Venue 8 7000 Series, a gorgeous 8.4-inch tablet running Android 5.1. Beginning with its super-sleek design, its wonderful 2,560 x 1,600 pixel OLED display, its four (!) cameras, and its excellent battery life, the Dell tablet was simply terrific (see my coverage in RuggedPCReview.com). And Android certainly had come a very long way. Dell, however, didn't stay long in the Android tablet space. The fact that I was able to get it at half of its original price should have been an indication.

Around that time I also began writing Android user manuals for some clients. This made me realize one major weakness of Android. Its user interface, while suitable for simple phone tasks, is hopelessly convoluted and everything changes with every new rev. Features, functions, screens and menus are forever haphazardly rearranged and renamed, making Android needlessly frustrating and complex to use. Adult supervision is needed to rein in that endless fiddling around with the Android UI. And with every new rev, Google's presence becomes more heavy-handed in Android. "Neutral" apps are being replaced with Google apps, and there is ever more pressure to use Google accounts and Google services. Google was, and is, taking over.

When my Dell tablet gave up the ghost a few months ago, I began looking for another personal Android device. This time, for a variety of reasons, I wanted a phone and not a tablet. This would give me a chance to get a very recent version of Android, and also the opportunity to see where your average consumer smartphone stands compared to rugged Android devices. While I was at it, I felt this was also a good time to get to know one of the rapidly growing Chinese smartphone giants, namely Huawei (Wowie? Wha-Way? Whaa-wee? Huu-Ahh-Way?).

So in May 2019 (just a few days before Google's sudden termination of its relationship with Huawei) I purchased a Huawei P30 Lite smartphone, a lower-cost version of the company's P30 Pro. Just like the iPhone, Huawei's latest phones all look almost identical, but there are fairly substantial differences as well. Screen sizes vary, as do cameras, materials, batteries and technologies. My (unlocked) P30 Lite cost just $300. It's between the iPhone XS and XS Max in size, but is both lighter and a bit thinner than even the smaller iPhone XS.

The P30 Lite runs Android 9.0.1, close to bleeding-edge. The 6.15-inch IPS LCD display is bright enough (450 nits as measured) and its 1080 x 2312 pixel resolution translates into a stellar 415ppi. The phone comes with 4GB of RAM, 128GB of internal storage, and a micro SDXC slot that can handle up to 1TB cards.
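That pixel density figure is easy to check: divide the number of pixels along the screen diagonal by the diagonal size in inches. Here is a minimal Python sketch of that arithmetic, using the P30 Lite numbers quoted above. The function name `pixels_per_inch` is just an illustrative choice.

```python
import math

def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density from resolution and diagonal screen size."""
    diagonal_px = math.hypot(width_px, height_px)  # pixels along the diagonal
    return diagonal_px / diagonal_in

# P30 Lite figures quoted above: 1080 x 2312 pixels on a 6.15-inch display
p30_lite_ppi = pixels_per_inch(1080, 2312, 6.15)
print(round(p30_lite_ppi))  # 415
```

The same function works for any display whose resolution and diagonal are known, which makes it handy for comparing spec sheets that list one but not the other.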

There are four cameras: a 24MP f/2.0 selfie camera, a 24MP f/1.8 main camera (EU versions even get a 48MP camera!), an 8MP ultrawide camera, and a 2MP "depth sensor" camera for adding bokeh effects. Despite the very high resolution, video is limited to 1080p/30fps, probably so as not to compete with the higher-end models. There are two nano-SIM slots, but one shares space with the micro-SD card, so you can't have both.

There's a reversible USB Type-C port, a separate standard 3.5mm audio jack, a non-removable 3,340mAh Li-Polymer battery, 802.11ac WiFi, NFC, and Bluetooth 4.2. The whole thing is powered by an octa-core HiSilicon Kirin 710 chipset (4 x 2.2GHz Cortex-A73 and 4 x 1.7GHz Cortex-A53) made by Huawei itself and running up to 2.2GHz. The body looks quite high-rent, though it's plastic and not metal like the higher level Huawei P30 phones.

There are no references to ruggedness at all, and the P30 Lite comes with a transparent protective boot and also a screen protector. So presumably no splashing in the water or rough handling. The phone supports face recognition and also has a fingerprint scanner in the back. That may sound an odd placement, but it works great.

Since this is pretty much a bargain brand-name phone and doesn't have the same high-powered chipset as the top-of-the-line models, how well does it perform? Very well, actually. In the AnTuTu and PassMark Mobile benchmarks it scored a good 50% faster than any of the recent rugged Android handhelds and tablets we tested. Just another example of how consumer tech remains vexingly ahead of anything longer-lifecycle/lower sales volume rugged devices can do.

So the issue remains. Consumer tech is fast, advanced, light, glossy and, unless you go ultra-premium, cheap. There's a protective case for anything and everything. If a company buys a thousand devices like this Huawei P30 Lite, it'll cost $300,000. If it goes for dedicated rugged devices, it may cost a million. Or not (the rather rugged and well-sealed Kyocera DuraForce PRO 2 we recently tested ran just $400). Then it comes down to issues like service, warranties, ease of replacement, and so on. Personally, I very much believe in spending money for high-quality tools made for the job, but it's easy to see the lure of consumer tech.

So where will things go from here? Android will almost certainly maintain its dominance in smartphones. Unless Microsoft pulls an unexpected rabbit out of its corporate hat, pretty much all enterprise and industrial handhelds will be Android for the foreseeable future. I fear that Google’s increasingly heavy presence all through Android will become a more pressing problem. As will the lack of a clean, compelling “professional” version of Android (the current Android AOSP, Android for Work, etc., are insufficient).

With tablets it’s more difficult to tell Android’s future trajectory, mostly because of definitions of what counts as a tablet. I’d guess 1/3 iOS, 1/3 Android, and 1/3 Windows, but many statistics suggest otherwise. statista.com, for example, sees it as 58% iOS, 26% Android, and 16% Windows for worldwide tablet OS share in 2018. Android’s future in tablets may also depend on who will come out on top in Google’s current internal squabble between Android and the Chrome OS.

Version fragmentation remains a serious weakness in Android. And since a lot of devices cannot be updated and version support ends quickly (Version 6, Marshmallow, is already no longer supported by Google), the roughly annual new major version release obsoletes Android hardware very quickly. That’s no problem for consumer tech. But it is definitely an issue for vertical and industrial markets where manufacturers need to decide what to build and support, and customers in what and whom to place their trust.

Posted by conradb212 at 6:29 PM

March 25, 2019

The Drone in Your Future

Drones have made tremendous progress and will likely become part of many jobs.

In this article I will provide a brief overview of drone technology, and the application and impact it may have on field operations. Because, believe it or not, drones may well become part of your job.

Not too terribly long ago I came across an article and an accompanying YouTube video by a man who had attached a small digital camera to a remote controlled toy helicopter. The contraption worked well enough to yield video from high above the trees around his house. It was a fun project, the man felt, and there might actually be practical application for such a thing. But most who watched the video probably dismissed it as just a weird hobbyist science project.

What the man may or may not have known was that he may well have cobbled together one of the first "drones" as we know them today. I say "as we know them today" because unmanned aircraft that could shoot pictures have been used as far back as the Spanish-American War in the late 1800s, back then mounted to a kite. The actual term "drone" apparently dates back to the mid-1930s when unmanned remote-controlled vehicles made the buzzing sound of hive-controlled male bees, or "drones."

Drones as we know them today are of much more recent vintage. They came about when "action cameras" like GoPros became small and light enough to be mounted onto inexpensive little helicopters, usually with four rotors. Available both as cheap toys and also as more serious flying cameras, drones quickly gained a reputation as intrusive pests used by paparazzi and other intruders of privacy, and also as a danger to real aircraft.

That has gotten to a point where the use of drones is more and more regulated, and sadly in a haphazard and uneven way that frustratingly varies from place to place.

So let's see where drones are today and where they may be headed. Yes, you can pick up a cheap drone at every Walmart or similar store for very little money. Those do take video and they do fly, but they don't do either well. As a result, most quickly crash or get lost. They may be kids' toys, but since most lack any degree of stabilizing electronics, they are actually difficult to control and fly.

Those willing to spend more money will find a rapidly increasing degree of sophistication. Here at RuggedPCReview.com we invested in one of the latest drones of market leader DJI (which commands almost 3/4 of the market). The Mavic 2 Zoom retails for US$1,299, which is in the range of more serious consumer digital cameras.

For that you get a remote-control quad-copter drone with a 12-megapixel camera with 2x optical zoom and full gimbal movement. The Mavic 2 weighs about two pounds, its four rotor arms, each with its own little electric motor, twist away for compact storage, and it can fly for about half an hour on a charge of its removable 60 watt-hour battery. The remote controller works in conjunction with an iPhone or Android smartphone, which means you'll see live video right on your phone, and it can even live-stream it to social media.

Now lest you think drones like the Mavic 2 are still just toys, this thing can go as fast as 45 miles per hour, it can go as high as 1,600 feet, it can be up to five miles away from the controller, and it has a (battery-limited) flying range of about 11 miles.

Thanks to GPS and numerous sensors, the Mavic 2 drone also has amazing smarts. It can follow preset GPS coordinates/waypoints. And it recognizes obstacles and will navigate around them. It has all sorts of programs, like following the pilot, flying circles around a still or moving object, doing special effects, and so on. And it also has a bunch of LEDs so it can be seen in bad light, and also to communicate status.

How difficult is it to control a drone like the Mavic 2? That depends on one's dexterity and how much learning time is invested. Any video game player will be right at home with the controller. There are different flight modes, including a restricted beginner mode. There are helpers such as auto-launch, and also auto-return and auto-land. What perhaps is most amazing is how rock-solid the Mavic 2 is in the air. If you let go of the controls, it sits still in the air as if on a tripod. Even modest wind doesn't faze it. You can leisurely peruse the scenery from high up there, looking any which way you want.

But now let's look at what all that means for field workers and the typical users of rugged mobile computers.

Quite obviously, being able to control a camera that can fly has enormous potential. It can easily go anywhere and anyplace where ladders, scaffolds, and potentially dangerous trekking would otherwise be needed. That makes it ideal for inspections and damage assessment, whether it's the big picture from way above or from very close-up. It can be used to take breathtaking video of real estate, natural settings, bridges, tall buildings and plenty more. It can go inside of structures that may be unsafe. The application potential is endless.

Sounds almost too good to be true, doesn't it? But it's all real, and it's only going to get better and ever more sophisticated. The small 12-megapixel camera not enough? For a mere $200 more, DJI offers the same quadcopter setup with a 20-megapixel Hasselblad camera. Need a bigger drone with some payload capacity? They are available. Need a bigger screen? You can hook the controller up to a tablet. Is the screen not bright enough? DJI offers a controller with its own sunlight-viewable display.

And then there are the laws and regulations. Even for a small drone like the DJI Mavic 2, the controller insists on downloading the latest regulations and restrictions before each and every flight. If too close to a sensitive area, one may have to get permission to fly first. And anything that is considered commercial use of a drone requires a license. It's not terribly difficult to get, but still requires studying and passing a test. And depending on where you are, the law of the land may make little legal difference between flying a drone and flying an actual aircraft.

That all said, it's easy to see that drones will likely become tools for the job for many field and other workers who now use rugged mobile electronics. So the sooner you start acquainting yourself with drone technology, the better off you are. -- Conrad Blickenstorfer, March 2019

Posted by conradb212 at 4:28 PM

January 24, 2019

How bright is your screen?

If you take a handheld computer or a tablet or a laptop outdoors and on the job, it's really important whether you can still see what's on the screen clearly enough to actually use the computer.

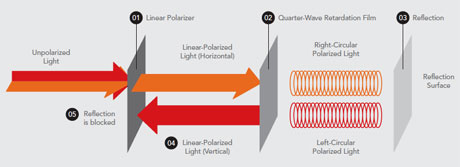

Whether you can or not depends on a lot of things, like how bright it is outside, whether there are reflections, the size of the screen, its sharpness and contrast, viewing angles, and so on. Experts calculate the "effective" contrast ratio in bright outdoor light by estimating sunlight compared to the light that's reflected back by the typical computer screen with its various treatments and several reflective layers. How well those internal reflections are controlled determines how readable the screen remains in sunlight.
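To make the "effective contrast" idea a bit more concrete, here is a minimal Python sketch of one commonly cited simplification: one plus the light the display emits divided by the ambient light it reflects back. The formula choice, the function name, and all the numbers below (ambient brightness, the 2% reflectance, the backlight levels) are illustrative assumptions, not measurements.

```python
def effective_contrast_ratio(display_nits: float, reflected_nits: float) -> float:
    """Commonly cited simplification: 1 + emitted light / reflected light."""
    if reflected_nits <= 0:
        raise ValueError("reflected light must be positive")
    return 1 + display_nits / reflected_nits

# Hypothetical illustration values, not measurements:
ambient_nits = 10_000        # assumed bright outdoor light
screen_reflectance = 0.02    # assumed: the screen reflects 2% of that light
reflected = ambient_nits * screen_reflectance

for backlight in (200, 500, 1500):
    ecr = effective_contrast_ratio(backlight, reflected)
    print(f"{backlight:>5} nits backlight -> effective contrast {ecr:.1f}:1")
```

Under this simplified model, halving the reflected light helps exactly as much as doubling the backlight, which is why reflection control matters as much as raw brightness.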

With current display technology, of equal or perhaps even greater importance is the backlight. A strong backlight is generally better than a weak one. I say "generally" because a super-strong backlight can make a screen look washed out. That happens when the black pixels cannot block a backlight that's too powerful for a given screen technology. And, of course, a strong backlight drains the battery much more quickly.

Nevertheless, backlight strength is very important to outdoor and sunlight readability. But what exactly constitutes a "strong" backlight?

Quantifying light is never easy. Back in the day everyone knew that a 100 watt lightbulb was bright, a 60 watt so-so, and 40 watt was best used where you needed some but not a lot of light.

But that was before the short-lived era of spiral fluorescent light "bulbs" that were "100 watt-equivalent," and before the current era of LED lights that are also still sold by how many watts equivalent to an old incandescent bulb they generate. The brightness of LED bulbs is also stated in lumens and sometimes lux. Figuring out what lumens ("the total quantity of visible light emitted by a source") and lux ("a unit of measurement for illuminance") mean is too complicated to be useful in real life. And so for as long as people remember how bright a "real" 100 watt lightbulb was, newer technology light bulbs will probably be sold as so and so many watts "equivalent."

What does all of that have to do with backlights? Not that much. Only that describing the strength of a backlight is just as complex and confusing as it is with light bulbs. So how is it handled?

In essence, the light emitted by a display backlight is given in a unit called candela per meter squared, or cd/m². Candela is both the average light of one candle and, per Google dictionary, "the luminous intensity, in a given direction, of a source that emits monochromatic radiation of frequency 540 × 10¹² Hz and has a radiant intensity in that direction of 1/683 watt per steradian." So there. Now take that per meter squared. Auugh.

Given this obvious complexity, someone in the industry at some point suggested calling cd/m² simply "nit." So 100 cd/m² became 100 nits. Maybe "nit" is short for "unit." No one seems to know. Today, many display specs include a nits rating.

Even technically inclined folks often confuse luminance with illuminance. Illuminance is the amount of light striking a surface. Luminance is the brightness we measure off of a surface which is hit by light. So for our purposes, a light source behind the screen lights up the screen. How bright that light makes the surface, its luminance, is measured in nits.

How bright is a nit? Or 100 nits? Since 100 nits is 100 candela per square meter, imagine a hundred candles sitting underneath a roughly 3 x 3 foot square. How bright would that be? I have no idea. So perhaps it's better to think of things that, on average, generate so and so many nits and go by that. Standard laptops generate about 200 nits. A good tablet or smartphone between 500 and 600 nits. Rugged laptops can generate as much as 1,500 nits, as can modern 4K HDR TVs.
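For those who like to see the candle picture as arithmetic, here is a small Python sketch. It assumes a flat, evenly lit surface viewed head-on, in which case the total luminous intensity in candela is simply the luminance in nits multiplied by the area in square meters. The function name is an illustrative choice.

```python
FOOT_IN_METERS = 0.3048

def candela_for_surface(nits: float, width_ft: float, height_ft: float) -> float:
    """Luminous intensity (cd) of a flat, evenly lit surface seen head-on."""
    area_m2 = (width_ft * FOOT_IN_METERS) * (height_ft * FOOT_IN_METERS)
    return nits * area_m2  # cd/m2 times m2 gives candela

# A 3 x 3 foot square is about 0.84 square meters, so at 100 nits:
print(round(candela_for_surface(100, 3, 3), 1))  # 83.6
```

So a 3 x 3 foot square at 100 nits works out to a bit under a hundred candles' worth of light, which is why the square is only "roughly" a square meter.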

What makes everything more difficult is where we view an illuminated surface. Even a 180 nits laptop can look bright and crisp indoors. Outdoors that same laptop would be barely readable. Outdoors the weather makes a big difference, as does being in the shade or under a blue or cloudy sky. And when it comes to competing with the sun, all bets are off. The sun generates between 10,000 and over 30,000 nits.

So for better or worse, to get an idea how bright the screen of a handheld, tablet or laptop is, look at its nits rating. Which, unfortunately, is listed only in a minority of spec sheets.

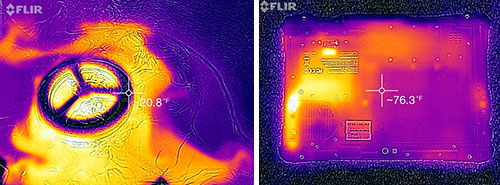

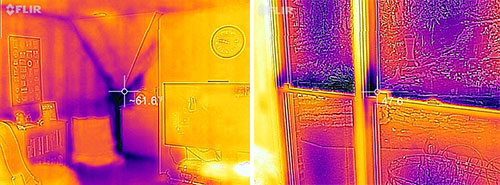

That's where a screen luminance meter comes in. The one we use here at RuggedPCReview.com has a range up to 40,000 nits and can show current or peak luminance of a display. We use it in conjunction with a test template to not only record maximum luminance in nits, but also nits readings in steps from black to white.

Until something better comes along, every handheld, tablet or laptop screen spec should include a nits rating. Customers need to know that before making a purchase decision. -- Conrad Blickenstorfer

Posted by conradb212 at 5:39 PM

September 13, 2018

Ditching laptops for tablets — evolution or disruptive paradigm shift?

We do product testing adventure trips two or three times a year. That means packing a lot of equipment, including chargers, memory cards of various sizes and standards, all sorts of cables and adapters, suitable software, batteries and their chargers, and whatever it takes to keep the whole thing running. At the center of it all, on every trip, was a laptop powerful and competent enough to handle whatever came our way.

Not this time. We spent two weeks in the Central American nation of Honduras, on one of its channel islands, Roatan. Roatan, even now, remains wild and primal, and is, compared to almost every other such tropical destination, virtually untouched by tourism. Sure, there are small resorts here and there, but, by and large, there is no development. There is one road. No movie theater. No healthcare facilities to speak of. And this time we left the laptop at home. Instead, tablets and smartphones came with us. How did that work?

The jury is still out.

First I should say that we did still take a laptop with us, a small 11.6-inch Acer netbook. The little thing was the successor of earlier and even smaller Acer Aspire One netbooks that we’d used for years. Those, though powered by basic Atom processors, were amazingly useful. They easily handled all the many hundreds of photos we shoot on every trip, kept us in touch with civilization, and just generally did what we needed them to do. Initially, the larger little Acer had done even better, but that was several years of Microsoft “progress” ago. On this trip, the Acer, upgraded to the latest version of Windows 10, couldn’t get out of its own way, it had become so slow.

Our big office Apple MacBook Pro, likewise, stayed at home. It’d become the victim of the “retina revolution” that had rendered its pre-retina screen unacceptably coarse to our eyes. And it weighs a ton. Not really, but it feels that way compared to newer and sleeker gear.

So this time it was all iPads and iPhones to handle all the writings, test results, observations, and all the video and still images we took both above and under water.

The big thrill: viewing your pics and video on the big 12.9-inch iPad Pro was glorious. It makes everything look even better than it actually is. And image and video manipulation apps have progressed to a point where you can do some tasks faster, better, and more intuitively than on a laptop. Tablets are also easier to take along, pass along, and stow away than laptops. So from that perspective we didn’t miss laptops at all. Oh, and sharing pictures and videos among ourselves and with newly-made friends is ever so easy with Apple AirDrop.

But not all was well. Copying from camera to tablet or phone was a total drag. Much of it was Apple’s fault for making it ridiculously difficult to use third party add-ons. Like, a multi-card adapter with a Lightning plug, specifically sold to work with Apple gear, was coldly rejected by our iPhones and iPads: “Peripheral not supported.” Another such device required downloading a special utility to work with the Apple gear, and the documentation for that was so marginal that a lot was lost in translation. Apple stuff tends to be simple to use, unless you have to use it with something non-Apple.

And, of course, iPhones and iPads have no card slots. So in this day and age of 4k video, even 256GB Apple gear quickly fills up. And then what?

Then there’s the app barrier. While tech pundits love to declare desktops and laptops dead and we’re all post-PC, that ain’t so. Most serious work is still done at the desk with something more precise than the tip of a finger. Which means there is a vast user-interface and experience gulf between how things are done on a tablet and how it’s done on the desktop. Things that take a minute on conventional equipment can take half an hour on a tablet, or it can’t be done at all. Or take typing, the bread and butter of reporting and creating content. No tippedy-tap finger typing can replace a good physical keyboard. And being limited to using the good-looking but amazingly stupid Apple onscreen keyboard is infuriating.

So it was a struggle. We spent way too much time uploading pictures and video, keeping track of it all on a non-accessible file system, using apps that sometimes worked and sometimes didn’t, or suddenly were no longer supported at all.

At almost any time we felt we were caught between two paradigms, that of how productivity used to work and that of the new world of billions of smartphones.

So the jury is indeed still out. Old gear simply does not work anymore. Microsoft, and technological progress in general, have seen to that. Brand-spanking new gear and software doesn’t work very well in the tropics and on adventure trips, because all that new stuff wants the internet constantly, and make it pronto and super fast, or else you’re dead in the water.

Being back in the office felt like a return to the past. Uploading pictures, using desktop software, cataloging files, fixing things. Many images and vids that had looked glorious full-screen on the big iPad suddenly looked much less impressive and sort of lost on our big iMac27 desktops where a million other things are open and vying for attention.

Is what we experienced the hallmark of disruptive technology? One that has not evolved smoothly from what was there before, but leaped ahead to another way of doing things? One that cannot be smoothly bridged no matter how you try? One that means leaving skills and methods and experience behind and starting over? It does seem that way. And I say that as someone who uses both “old” and new every day. -- Conrad H. Blickenstorfer, September 2018

Posted by conradb212 at 6:21 PM

August 15, 2018

Why ruggedness testing matters

Ruggedized mobile computing gear costs more than standard consumer technology, but in the long run it often costs less. That’s because rugged computers don’t break down as often, they last longer, and there isn’t as much downtime. What that means is that despite the higher initial purchase price, the total cost of ownership of rugged equipment is often lower.

That, however, only works if ruggedized products indeed don’t break down as often, indeed last longer, and indeed do not cause as much downtime. Ruggedness, therefore, isn’t just a physical thing. It’s an inherent value, an implied promise of quality and durability. And that makes ruggedness testing so important.

Interestingly, ruggedness testing is entirely voluntary. While computers must pass stringent electrical testing before they can be sold, ruggedness testing isn’t officially required anywhere. It isn’t regulated. Electrical testing makes sure a computer adheres to standards, will not interfere with other equipment, and meets a wide range of other requirements. Why not ruggedness?

It’s probably because electric interference can affect third party equipment and systems, and possibly do harm, whereas ruggedness “only” affects the customer. And it’s also because unlike electrical interference standards that are absolutes, the degree of ruggedness required depends on the intended application. In that sense, the situation is similar to the automotive field where there are strict governmental testing requirements for safety and emissions (which affect third parties) but not for performance, comfort or handling (which only affect the customer).

Given the voluntary nature of ruggedness testing, how should it be conducted, and how relevant are the results of that testing?

For the most part, testing is performed as described in the United States Department of Defense’s “Environmental Engineering Considerations and Laboratory Tests,” commonly known as MIL-STD-810G. In some areas, testing is done in accordance with a variety of different standards, such as IEC (International Electrotechnical Commission), ANSI/ISA and others. By far the most often mentioned is the MIL-STD-810G.

So what, exactly, is the MIL-STD-810G? As far as its scope and purpose go, the document says, “This standard contains materiel acquisition program planning and engineering direction for considering the influences that environmental stresses have on materiel throughout all phases of its service life.” Minimizing the impact of environmental stresses, of course, is the very purpose of rugged design, so using the MIL-STD-810G as a guide to accomplish and test that makes sense.

How does the MIL-STD-810G go about its mission? In the document’s foreword, it says that the emphasis is on “tailoring a materiel item's environmental design and test limits to the conditions that the specific materiel will experience throughout its service life, and establishing laboratory test methods that replicate the effects of environments on materiel, rather than trying to reproduce the environments themselves.”

The MIL-STD-810G is huge and often very technical, as you’d expect from a document whose purpose is to provide testing for everything that may be used by the US Department of Defense. The operative term, therefore, is “tailoring.” Tailoring the tests to fit the conditions a specific item or device may encounter through its service life. That means it’s up to manufacturers to knowledgeably pick and choose the tests to be performed.

It also means that simply claiming “MIL-STD-810G approved” or “MIL-STD-810G certified” or even “designed in accordance with MIL-STD-810G” means absolutely nothing. Not even “passed MIL-STD-810G testing” means anything unless accompanied by an exact description of what testing was performed, to what limits or under what conditions.

So how do we go about ruggedness testing that truly matters? First by determining, as the MIL-STD-810G suggests, the conditions that a specific device will experience in its service life. Let’s think what could happen to a rugged handheld computer.

It could get dropped. It could get wet. It could get rattled around. It could be exposed to saltwater. It could get crushed. It could be used in very hot or very cold weather. It could get scratched. It could be used where air pressure is different.

That’s the important stuff. There may be other environmental conditions, but for the most part, this is what might happen to a handheld. And it must be able to handle those conditions and events while remaining functional.

It’s instantly obvious that it’s all a matter of degree. From what height can it fall and survive? How much water can it handle until it leaks? How much crunching force before it cracks? How hot or cold can it be for the device to still work? How much vibration before things break loose? And so on. It’s always a matter of degree.

So how does one determine the degree of ruggedness required? Deciding that requires thinking through possible use scenarios and then, applying common sense, arriving at the appropriate level of protection. Too little and it may break, which is bad for both the customer and the reputation of the manufacturer. Too much and it may become too bulky, heavy and expensive.

Ruggedness is a compromise. Nothing is completely invulnerable, freak accidents can and will happen, and figuring out how rugged is rugged enough is an educated judgement call, one based on knowledge and experience. Facilitating the desired degree of ruggedness is an act of balance and good design. And that, again, requires experience.

So how might one go about determining the proper degree of ruggedness? Here are some of the considerations:

A handheld computer will get dropped. It’s just a matter of time. What’s the height it may get dropped from? I am six feet tall. When I stand and use a handheld, the device is about 54 inches above ground, 4-1/2 feet. If it falls out of my hands while I use it, it’ll fall 4-1/2 feet to whatever surface I am standing on. That could be grass or a trail with pebbles and rocks on it, or anything between. And a user may be taller or shorter than I am. So one might conclude that if the device can reliably survive repeated falls from 5 feet to a reasonably unforgiving surface, such as concrete, we’d be safe. The device could, of course, fall so that its display hits a pointy rock. Or it could slip out of your hands while you’re standing on a ladder. But those are exceptions.

So now we check the MIL-STD-810G on how to conduct a test where a device is dropped from five feet to concrete, and, surprise, there is such a test. If the device passes that test, described in Method 516.6, Procedure IV, all is (reasonably) well.

But do consider that MIL-STD-810G Method 516.6 alone consists of 60 pages of math, physics, statistics, graphs, rules, descriptions, suggestions and rather technical language. Simply claiming “MIL-STD-810G” is not nearly enough. The device specs must describe the summary result, and supporting documentation should describe the reasoning, specifics, and sign-offs.
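To put the drop heights discussed above into physical terms, here is a minimal Python sketch that estimates how fast a device is moving when it hits the ground. This is plain free-fall physics that ignores air resistance, not anything taken from the MIL-STD-810G test procedure, and the function name is an illustrative choice.

```python
import math

G = 9.81                # gravitational acceleration in m/s^2
FOOT_IN_METERS = 0.3048

def impact_speed_mps(drop_height_ft: float) -> float:
    """Speed at impact for a free fall from the given height, ignoring air resistance."""
    height_m = drop_height_ft * FOOT_IN_METERS
    return math.sqrt(2 * G * height_m)

for height_ft in (4.5, 5.0):
    v = impact_speed_mps(height_ft)
    print(f"{height_ft} ft drop: about {v:.1f} m/s ({v * 2.23694:.1f} mph)")
```

A five-foot drop works out to roughly 5.5 meters per second, or about 12 mph, at impact. What the speed alone cannot tell you is how the device lands and on what, which is why the surface and the number of drops belong in the test description.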

Now let’s look at another example. Sealing. How well can a device keep liquids from getting inside (which is almost always fatal to electronics)? Since rugged handhelds are used outdoors, they obviously must be able to handle rain. But that’s not enough. Working around water means that eventually the device will fall into water. What degree of protection is reasonable? Given that most consumer phones are now considered waterproof, rugged handhelds should be as well. The question then becomes what degree of immersion the device can handle, and for how long.

Here again, much is common sense. No one expects a device to fall off a boat into a deep lake and survive that. But if it falls into a puddle or a shallow stream, it should be able to handle that. The most often used measure of sealing performance is IEC standard 60529, the IP Code. IP67, for example, means a device is totally sealed against dust, and can also survive full immersion to about three feet. Unfortunately, the IP rating is imperfect. Several liquid protection levels are qualified with a “limited ingress permitted.” Electronics cannot handle any degree of ingress. Amount of liquid, pressure of liquid, and time of exposure all matter. And what happens if a protective plug is not seated properly? So here again, specs should include the summary, with the exact testing procedure in supporting documentation.
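For readers who have never decoded an IP rating, the two digits can be read with a small lookup like the sketch below. The one-line descriptions are my own short paraphrases of the IEC 60529 levels, not the standard's wording, and the function name is made up for illustration. The actual test conditions (water volume, pressure, duration) are in the standard itself.

```python
# First digit: protection against solid objects and dust (paraphrased summaries).
SOLIDS = {
    "0": "no protection",
    "1": "objects 50 mm and larger",
    "2": "objects 12.5 mm and larger",
    "3": "objects 2.5 mm and larger",
    "4": "objects 1 mm and larger",
    "5": "dust-protected (some ingress, not harmful)",
    "6": "dust-tight",
    "X": "not rated",
}

# Second digit: protection against water (paraphrased summaries).
LIQUIDS = {
    "0": "no protection",
    "1": "vertically dripping water",
    "2": "dripping water, enclosure tilted up to 15 degrees",
    "3": "spraying water",
    "4": "splashing water",
    "5": "water jets",
    "6": "powerful water jets",
    "7": "temporary immersion (up to 1 m for 30 minutes)",
    "8": "continuous immersion (conditions set by the manufacturer)",
    "9": "high-pressure, high-temperature water jets",
    "X": "not rated",
}


def decode_ip(code: str) -> tuple[str, str]:
    """Return (solids protection, liquids protection) for a code such as 'IP67'."""
    text = code.strip().upper()
    if len(text) < 4 or not text.startswith("IP"):
        raise ValueError(f"not an IP code: {code!r}")
    solid, liquid = text[2], text[3]
    if solid not in SOLIDS or liquid not in LIQUIDS:
        raise ValueError(f"unknown digit in IP code: {code!r}")
    return SOLIDS[solid], LIQUIDS[liquid]


for rating in ("IP54", "IP65", "IP67", "IP68"):
    solids, liquids = decode_ip(rating)
    print(f"{rating}: solids = {solids}; liquids = {liquids}")
```

Note that the liquid levels are not strictly cumulative: a device tested for immersion has not necessarily been tested against water jets, which is one more reason the specs should state exactly what was tested.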

I will not go through every single environmental threat, as the approach is always the same: What is the device likely to encounter? How is it protected against that threat? How was resistance tested? Who tested it? And what was the result?

Ruggedness testing is about common sense. If it is likely that a device will be used in an unpressurized aircraft, how high will that aircraft fly? Determine that and then test operation under that pressure.
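As a rough illustration of "determine that and then test," the sketch below converts a flight altitude into the air pressure a test chamber would have to reproduce. It assumes the International Standard Atmosphere model, which only holds up to about 11 km, and the altitudes listed are hypothetical examples, not values taken from any test procedure.

```python
SEA_LEVEL_PA = 101_325.0   # standard sea-level pressure in pascals
FOOT_IN_METERS = 0.3048


def pressure_at_altitude_pa(altitude_ft: float) -> float:
    """Approximate air pressure at altitude using the standard-atmosphere formula."""
    altitude_m = altitude_ft * FOOT_IN_METERS
    if not 0 <= altitude_m <= 11_000:
        raise ValueError("formula only applies from sea level to about 11 km")
    return SEA_LEVEL_PA * (1 - 2.25577e-5 * altitude_m) ** 5.25588


# Hypothetical altitudes for unpressurized flight.
for feet in (5_000, 10_000, 15_000):
    kpa = pressure_at_altitude_pa(feet) / 1000
    print(f"{feet:>6} ft -> {kpa:.1f} kPa")
```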

If a device is likely to be used in very cold or very hot climates or conditions, determine how hot and cold it might get, then test whether it will work at those temperatures, for how long, and without an unreasonable drop in performance.

Test procedures for most environmental conditions can be found in the MIL-STD-810G or other pertinent standards. I say “most” because some are not included. For example, I’ve often wondered why some rugged devices use shiny, gleaming materials that are certain to get scratched and dented on first impact. It will not affect performance, but no one likes their costly rugged device to be all scratched up after a week on the job. Scratch and dent resistance should be part of ruggedness testing.

The big picture is that serious, documented ruggedness testing, based on common sense and tailored for the device and application at hand, matters. The ability to hold up on the job is what sets rugged handhelds apart. Testing that ability is an integral part of the product, one that benefits vendors and customers alike. -- Conrad H. Blickenstorfer, Ph.D., RuggedPCReview.com

Posted by conradb212 at 7:18 PM

June 5, 2018

Make IP67 the minimum standard for rugged handhelds

Few outside of rugged computing and perhaps a couple of other sectors know what an "IP" rating means, or the specific significance of "IP67." Those inside those markets, however, cherish the designation for what it is: a degree of protection that brings peace of mind.

Really? Well, yes. If an electronic device is "IP67-sealed," that means neither dust nor water will get in. Specifically, there's "ingress protection" designed to keep solids and liquids out. Of the two, dust and water, it's primarily water that most are concerned about. Water as in rain, splashing, spilled drinks, hosing down, or going under. In essence, anything that is used outdoors is in more or less constant danger of coming in contact with water, and since water is unpredictable, one never quite knows how much water that will be, or where it'll be coming from. What we do know is that we'd rather not have water damage or destroy our expensive and necessary (and often irreplaceable) electronic gear.

Why is there a rating system? Because most sealing and protection isn't binary. It isn't either on or off. Sealing and protection come in degrees. Sealing a piece of electronic equipment means extra work, extra cost, and often some extra hassles, like more bulk and weight or less convenient operation. So sealing and protection should have minimal impact on cost and operation, and that is why they come on a sliding scale.

Which makes total sense. If a certain type of device will never be used outdoors it makes no sense to spend extra effort to make it dust and waterproof. If it'll never leave a vehicle, it doesn't really need to be protected against full immersion.

But if there is a chance that dust and liquids may be an issue, it still may make sense to consider the degree of protection needed. A laptop, for example, may get rained on, but it's not very likely to get dropped into a pond and few will want to hose it down with a high-pressure jet of water. It may be a different story for a vehicle mount designed to be used on tractors, scrapers, or bulldozers. Those are routinely hosed down, and if the panel computer mounted on them can be hosed down along with the rest of the vehicle, all the better.

What is most likely to get rained on or drop into a puddle or worse? That would be handhelds. Which means that handhelds should, by default, be sealed to IP67 specifications. IP67 means completely dustproof and also protected against full immersion in water down to about a meter, even if it should take half an hour to retrieve the device.

Why do I offer this suggestion? Because I've become aware of the considerable psychological impact of a device being fully protected versus only offering some protection.

I've come to that conclusion based on many years' worth of rugged product testing and outdoor product photography. If a product is IP67-sealed, I know we don't have to baby it, we can sit it in a puddle or under a waterfall and it will be okay. If it's less than IP67, there is none of that confidence. It may or may not be damaged, and should water get in after all and destroy the product, then it's our fault because it was only designed to protect to some level.

And that makes all the difference in the world. It's the difference between feeling you need to protect the product and keep it from harm, and being able to use it any which way you need to, without the extra stress of having to baby it.

For me, perhaps the biggest example of the huge difference between "sort of" protected and fully protected are products that I use every day of my life, no matter where I go. Those being my phone and my watch. In my case an iPhone and an Apple Watch. My early iPhones and my first Apple Watch were not waterproof, and I was keenly aware of that weakness, always. So I was hyper-aware of the devices never getting rained on or subjected to any contact with water. They probably could have taken it, but just the prospect of damage and then being lectured by some Apple "genius" that, hey, you didn't take care of your iPhone and the damage is not covered — not good.

Apple apparently realized that, and most other smartphone manufacturers did, too, and so iPhones and Apple Watches have been fully waterproof for some time now. The psychological impact is substantial. Both are really IP68-sealed, meaning they can handle even prolonged immersion. So water is simply no longer an issue.

I am sure the psychological impact of using ruggedized, but only partially protected, handhelds on the job is even greater. They are expensive, they must not fail on the job, and they can't always be babied. And accidents can and will happen.

Which, to me, means that all rugged handhelds that will be used outdoors should be IP67 at least. There may be devices so complex that this is not easily doable. But with most it is doable. The technology is there. So let's get this done whenever possible. IP67 should be the minimum standard for any industrial handheld that may be used outdoors or near liquids.

Posted by conradb212 at 5:46 PM

February 24, 2018

A look at Apple's HomePod

by David MacNeill

I am the target market for HomePod. It’s an all-Apple house, subscribed to Apple Music and iCloud Drive — we’re all in. You can AirPlay other music services and devices to HomePod, but it’s really designed for fanboys like me. Can I order corn flakes from Amazon with it? No. Would I ever want to do that? No.

As a speaker, it is not overly expensive. The sky’s the limit for audiophile-grade speakers so $349 is not outrageous. One aspect that no one has raised in the reviews is that you don’t need a pair of them to fill a room. Pro audio studio monitors like my Yamaha HS system ($400 for the pair plus another $400 for the matching subwoofer/hub) and computer speakers like my Mackies ($100) are always sold in pairs. You only need one HomePod per room. I say it’s the best home sound you can get for the money.

Siri control is very well done: you can talk to it from across the room and it just works, even if music is playing. There’s room for AI improvement, certainly. I asked Siri to play KBSU (local NPR news station), which it streamed perfectly, but when I asked “her” to play KBSX (sister classical station) she couldn’t do it, saying it could not alter my queue while it is playing. I could not find a voice command to clear the queue. This only happens when streaming internet radio stations, not with music playlists. Bug!

These are mere software issues, of course. The hardware is brilliant. We have entered the era of computational audio. Concepts like “stereo” and “surround 5.1” are obsolete. HomePod knows where it is, where the walls are, where the reflective surfaces are that might echo or create standing waves (the muddy sound you get when you place a speaker in a corner). It beamforms the center channel to be in the middle of your room, while ambient sounds mixed left and right go where you’d expect. It’s uncanny. This is the most three-dimensional speaker I’ve ever heard. There is no sweet spot, it’s just loud and clear and everywhere. And if you move HomePod, it recalibrates in seconds. HomePod has iPhone 6-level computing power, making it the smartest in its class by a huge margin. That’s a lot of CPU potential. Voice apps, anyone?

HomePod is Apple at its best. The thing does exactly what it says it will do, then gives you more than you expected. Best of all, Apple has zero interest in monetizing what you say to your HomePod. Nothing is stored, everything is encrypted end to end. It lives to serve and is totally trustworthy.

Posted by conradb212 at 8:39 PM

September 13, 2017

The impact of iPhones on the rugged handheld market

Apple has been selling well over 200 million iPhones annually for the past several years. This affects the rugged handheld market both directly and indirectly. On the positive side, the iPhone brought universal acceptance of smartphones. That accelerated acceptance of handheld computing platforms in numerous industries and opened new applications and markets to makers of rugged handhelds. On the not so positive side, many of those sales opportunities didn't go to providers of rugged handhelds. Instead, they were filled by standard iPhones. There are many examples where aging rugged handhelds were replaced by iPhones, sometimes by the tens of thousands. That happened despite the relatively high cost of iPhones and despite their inherent fragility.

By now it's probably fair to say that the rugged handheld industry has only peripherally benefitted from the vast opportunity created by the iPhone's paving the way for handheld computers. Why did this happen? Why did it happen despite the fact that iPhones usually don't survive a single drop to the ground without damage, despite the fact that only recently have iPhones become spill-resistant, despite the fact that iPhones need a bulky case to survive on the job, and despite the fact that their very design -- fragile, slender, gleaming -- is singularly unsuited for work on the shop floor and in the field?

One reason, of course, is that Apple is Apple. iPhones are very high quality devices with state-of-the-art technology. Apple has universal reach, excellent marketing and packaging, and massive software developer support. And despite their price, iPhones are generally less expensive than most vertical market rugged handhelds. Another reason is that creating a rugged handheld that comes even close to the capabilities of a modern consumer smartphone is almost impossible. Compared to even the larger rugged handheld manufacturers, Apple is simply operating on another level. The combined annual sales of all makers of rugged handhelds, tablets and laptops are only about what Apple alone sells in just over a week.

All that said, what can the rugged handheld market learn from Apple? Actually quite a bit.

Take cameras for example. The iPhone has almost singlehandedly obliterated the market for consumer cameras. People take pictures with their iPhones today, not with dedicated cameras. That's not only because it's convenient, it's also because iPhone cameras are quite excellent. They are state-of-the-art and have massive software support. Sending pictures from an iPhone to another phone, a computer, social media or anywhere else is easy. iPhone pictures and videos can easily be viewed on a big screen TV via AirPlay.

How important are cameras in smartphones? Important enough that the iPhone 7 and 7 Plus became hugely successful despite only modest overall improvements over the prior iPhone 6 and 6 Plus. The iPhone 7 Plus came with two cameras that seamlessly worked together, there was an amazingly successful "portrait" mode, and overall picture taking with an iPhone 7 Plus became so good that here at RuggedPCReview.com we switched all product photography and video from conventional dedicated cameras to the iPhone 7 Plus.

The same is again happening with the new iPhone 8 and 8 Plus. Relatively minor improvements overall, but another big step forward with the cameras. Both of the iPhone 8 Plus cameras have optical image stabilization, less noise, augmented reality features thanks to innovative use of sensors, stunning portrait lighting that can be used in many ways, and also 4K video at 60 frames per second, on top of full HD 1920x1080 video in 240 frames per second slow motion. And the new iPhone X ups the ante even more with Face ID that adds IR camera capabilities and sophisticated 3D processing.